How to future-proof your career in the age of AI

Where can you add the most value when AI is outperforming us on more and more tasks?

👋 Hi, it’s Torsten. Every week or two, I share actionable advice to help you grow your career and business, based on operating experience at companies like Uber, Meta and Rippling.

If you enjoyed the post, consider sharing it with a friend or colleague 📩; it would mean a lot to me. If you didn’t like it, feel free to send me hate mail.

When you look at discussions around AI developments and their impact on jobs, there are two main camps that dominate the conversation.

Camp #1 is in denial. It sounds something like this:

“AI will never replace what I do. Real quality and craft will win against AI slop!”

Camp #2 consists of the doomsday prophets. They keep repeating versions of:

“We’ll all be out of jobs! In 5 years, there’s nothing AI can’t do. Better say ‘Please’ and ‘Thank you’ to ChatGPT to get on the good side of our new overlords!”

In my opinion, neither extreme take is 1) accurate nor 2) helpful.[1]

If you think AI will never replace what you do, you become complacent (which actually makes it more likely that AI will take your job).

If you think there’s no hope, you’re giving in to a defeatist attitude: instead of seeing yourself as response-able, you wait for someone else (e.g. the government or the AI labs) to do something.

Both attitudes are equally passive.

Look, I get it: The progress of AI is dizzying, and I’m not sure I am excited about a world where Clippy will eventually be smarter than me.

But denying or ignoring AI progress — or throwing up your hands in defeat — won’t help.

So, what can you do?

In my view, the only viable strategy is to proactively engage with AI, understand its capabilities and shortcomings, and lean into the things where you currently have an unfair advantage.

But what are those?

Where AI is at a disadvantage (for now)

First things first: I am not trying to predict the development of AI here; nobody can predict the future, and I have no insider information. Anything that AI can do today, whether at the level of an entry-level analyst or a seasoned expert, will only get better, and new capabilities will be added as well.

However, there are things where AI is at a structural disadvantage simply because it is, well, AI — something that (today) exists purely in the digital realm and relies on data we feed it. None of these obstacles are impossible to overcome, but models simply getting “smarter” won’t be enough; it would require very fundamental changes to how companies operate, and that will take time.

Based on that, and over a decade of experience seeing how work actually gets done in the real world at companies from startups to FAANG, I picked a few areas where I expect humans to continue to add a lot of value for at least the foreseeable future.

AI challenge #1: Limited data access & context

Generally, AI is good at things that it has a lot of training data on. And since the web is full of data on almost anything, AI is (becoming) good at a lot of things.

However, what AI is lacking is organization-specific context. Even if you gave it access to all your internal systems and documents: Anyone who has ever worked anywhere knows that a lot of important information and context only exist in people’s heads.

The problem is exacerbated by the fact that AI is not (currently) in the habit of asking a ton of questions to gather context. Even when you give custom instructions to do this, chatbots like ChatGPT will typically only ask for limited surface-level information before starting to generate a recommendation or deliverable.

And even if it did ask for more context: Who wants to answer a series of 57 questions in writing? And can you actually articulate everything that matters explicitly and concisely?

Now, the more we integrate AI into our daily work, and the more tasks are handled (or supported and observed) by AI instead of humans, the smaller this data gap will become. But at least for now, it puts AI at a disadvantage.

AI challenge #2: Not all work is digital (yet)

At tech companies, AI can execute a lot of tasks well (writing code, creating visuals, drafting documents, etc.) because the deliverables are completely digital and one person (or AI agent) can do the execution end-to-end.

However, the hard part of any large initiative at work isn’t this execution. It’s aligning different teams and keeping them in sync, getting executive buy-in, deciding ownership, securing resources, etc.

The larger the company, the more important (and challenging) this is. And then there are roles that are almost exclusively based on human interaction — Sales, for example. As long as most decision makers are human, there will be a need for humans to influence them.

Even if AI can make convincing arguments on paper: A chat transcript doesn’t sell things, people do.

Again, if we ever reach a point where large chunks of companies are run by AI agents, then a lot of these human friction points (and the need for people to handle them) will disappear.

But as long as you have humans in key positions, the difference between a failed project and a success will be how well you navigate these situations.

AI challenge #3: There is no alpha in commoditized intelligence

Large financial returns aren’t generated by average companies, but by outliers. These companies did something different from the competition: they took a different approach to building product, positioned themselves differently, unlocked novel distribution channels, or took an unusual approach to execution. Or they created an entirely new market where others didn’t see an opportunity.

Similar to how in the stock market all public information is already priced in, leaving no opportunity to generate outsized returns from it, using the same publicly available AI tools as everyone else is unlikely to give you a competitive edge (unless you have large, unique datasets to train them on that others don’t have access to).

For some companies, that might be acceptable — in fact, “good, but average” results might be better than what they’re getting right now. But those that want to win in competitive markets will want an edge over others, and you don’t get that from putting things on the same autopilot everyone else is using.

It’s the same reason companies today don’t outsource core competencies like product strategy or marketing, and why it makes more sense to bring in McKinsey to help cut costs than to hope they’ll create the next breakthrough product for you.

AI challenge #4: Accountability

Like it or not, executives love having a single person responsible for an area of the business or a critical initiative.

When they are not happy with the progress, they have someone they can drag into a meeting room and grill on turnaround plans.

But if ChatGPT messes up your product launch, what are you going to do? “Fire” ChatGPT and never use it again? Sue OpenAI? Or — god forbid — take responsibility yourself?

This will not necessarily protect entry-level IC positions, but it does suggest there will be a continued need for “managers” overseeing work (whether that’s done by humans or AI).[2]

What skills and traits provide a competitive edge in the age of AI?

Now, based on the above, let’s talk about what concrete skills you should focus on to maximize the value you bring to an organization.

In my view, it comes down to two key areas:

Having strong judgment and convictions

Being able to influence people to get stuff done

1. Having strong judgment and convictions

AI is good at solving somewhat straightforward problems with a clear right answer. Where a lot of the value lies (and will lie going forward), however, is in making good decisions in the face of incomplete data and ambiguous situations.

Even if AI gets better at this, as long as 1) everyone has access to the same AI solutions and 2) AI can’t really be held accountable the way a person can, developing good judgment (and building a reputation around it) will continue to be a key differentiator.

You’ll need this both to handle things that executives are not comfortable handing off to AI and to critically evaluate AI outputs.

But what does good judgment actually look like?

In a nutshell, good judgment is about making good decisions, recommendations or assessments. To do this, you interpret hard facts and data through the lens of situational context and personal experience.

There are a few “ingredients” to this:

Knowing what “great” looks like (and why it’s great)

You can’t properly evaluate something if you haven’t seen examples of excellence in that area.

For example, if you want to judge whether an email drafted by AI (or your team) is good, you need to 1) have seen examples across the entire quality spectrum and 2) have formed an opinion on what makes some better than others (and a few much better than the rest).

This will always be subjective to a degree. Call it “taste”, if you like.

The best way to develop this is to work directly with, or for, people at the top of their craft. The second best way is to study their work and try to understand how they operate and think (e.g. through courses, podcasts, and newsletters).

Knowing what works and what doesn’t

The vast majority of AI models people use are trained on publicly available data.

The problem: Similar to how scientific journals mostly publish statistically significant findings, companies mostly write about successful projects (and only about the final approach that worked, not the 100 things they tried before that didn’t).

In addition, these write-ups leave out the crucial details and nuance that matter when you’re actually trying to put an approach into practice.[3] The only way to learn this is through hands-on experience.

Case in point: When I work through complex problems like building a marketing attribution model with an AI like ChatGPT, it gets the general approach 95% right. And even when asked about potential challenges, it does fairly well and rattles off most of the obvious ones, almost as if it had experience doing this.

But when I keep pushing, it becomes clear the whole thing isn’t “thought through”. It’s the kind of proposal you get from a new grad who has learned about a topic in school but has never actually done the work.

As long as you know what you’re doing, you can actually iterate your way to a decent result,[4] but if you just asked an AI to “run with it”, you’d get something half-baked that won’t hold up in the real world.

So, to build out a competitive edge, you need to:

Do the work yourself (i.e. don’t just outsource it to AI to make your life easy)

Work through all of the setbacks and challenges you’ll inevitably encounter

Over time, identify what approaches work and which ones don’t

Use those insights to pressure-test your own proposals and those of others (incl. AI)

If that sounds like a lot of work, that’s because it is. But unfortunately, there is no shortcut.

Knowing what drives business impact vs. what is a waste of time

Execution isn’t the only blind spot AI has. Another area where it (currently) shows poor judgment is evaluating whether a project idea is even worth pursuing.

Given its lack of data on which projects led to business impact vs. which ones went nowhere, it cannot give a robust assessment here.

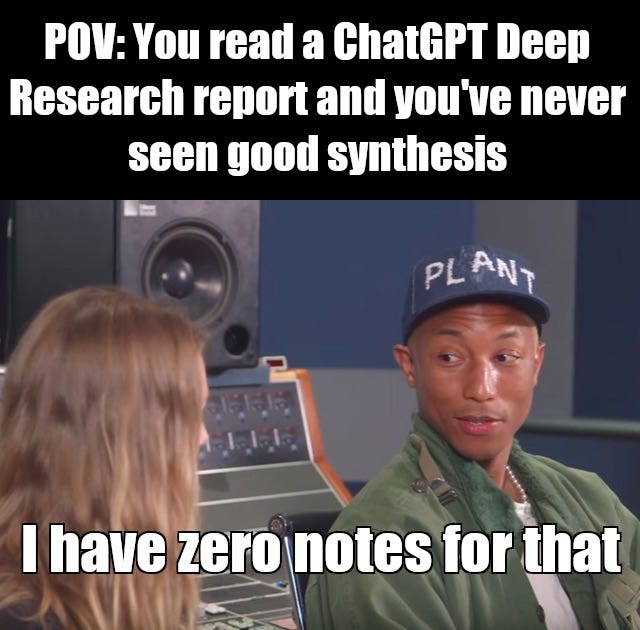

I keep a list of “Types of project to avoid” based on years of painful lessons, and I asked ChatGPT (4o and o3) to evaluate a few of them.

Since the project ideas I pitched all sounded reasonable on the surface, the responses I got were encouraging across the board, despite custom instructions to challenge me.

Just because a project feels important and interesting doesn’t mean you should do it, though. In fact, when people describe projects this way, I try to avoid them like the plague, as it’s typically a sign that they don’t know exactly how the project will drive impact (besides satisfying curiosity).

Being able to boil things down to the essentials

AI is getting better and better at giving detailed overviews or generating in-depth research reports.

However, it takes a lot of custom instructions, prompting and iteration to get to really clean, simple frameworks that boil complex decisions down to the essentials. Doing that, and knowing how much you can simplify without losing important nuance, requires judgment.

To practice this, first try to frame complex topics in the simplest terms possible. For example:

Framing decisions as a simple trade-off between two things

E.g. growth vs. profitability, speed vs. accuracy, etc.

Reducing an issue to two key dimensions and plotting it on a 2x2 matrix

E.g. grouping data science projects by business impact and effort

Expressing something as a function of a single (most important) factor

E.g. showing the ideal team structure based on team size

Then, see what decision you’d arrive at and, if you’re not happy with the result, add back nuance and complexity step-by-step until you reach a level that seems sensible.

Forming strong convictions

Finally, it’s important to use your judgment to form strong convictions.

Let’s say you’re working through a problem and, after a careful analysis, you reach your conclusion.

Now, to actually move forward, you’ll have to convince other people of your take. Throughout this process, people will challenge you, bring up additional considerations, and give their own opinions. Without strong convictions to guide you, you’ll be easily swayed by all this new input and start going in circles.

To be clear, I’m not saying you shouldn’t listen to feedback. But in ambiguous situations, you need someone to cut through the noise and advocate for something they strongly believe in.

This is what makes working with AI so frustrating at times. With no skin in the game and no real experience to draw from, it’s often a bit of a pushover. And while you can instruct AI to stand its ground and give strongly worded recommendations, it’s not the same as having actual convictions grounded in lived experience.

You can see the same difference between a consultant who doesn’t have to see the work through and an operator who actually needs to stand behind their recommendation and implement it.

2. Influencing people to get stuff done

As mentioned before, AI is rapidly getting better at creating outputs (code, documents, images etc.).

But the actual execution work is only a small portion of any project:

For example, when we built an account scoring model in my last job, writing the code for the first version of the model was actually the easy part. We spent the majority of the time 1) getting everyone to agree on what exactly to build and then 2) getting people across multiple teams comfortable with the outputs of the model.

These were also the phases of the project where it was most at risk of failing.

A model is completely useless if it’s not integrated into any critical business processes.

So if the SDRs and AEs didn’t trust the model, they wouldn’t make decisions based on its output, and our work wouldn’t have driven any impact.

And at least for now, this process of getting people bought into your work and driving adoption is a deeply human challenge that requires an intentional approach and lots of context.[5]

Context about people

The more you know about how people operate, what they care about, and how they think, the easier it will be for you to work with them — and ultimately get their support or sign-off.

There are a few different aspects to this:

What different people respond to

It’s easy to fall into the trap of thinking that other people think like you, and that, as a result, the best way to convince them is to give a presentation that you would find convincing.

However, I’ve learned that’s not true. For example:

When I worked at Rippling, our founder and CEO always wanted raw data in monthly updates so he could dig into the details and get unfiltered insights. He rejected any slides that had a narrative “spin” on the data

At Meta, on the other hand, everything that went to executives was packaged up into a clean narrative. Data was quoted selectively to support arguments in the summary, but no raw data tables were shared

The thing is: You can only get this context through direct exposure. These meetings are often not recorded or documented in detail, so an AI won’t have any data on this. You won’t either if you’re not in the room, so jump on any opportunity to listen in.

What incentives and priorities people have

People will ultimately always act in their own self-interest. As a result, understanding each person’s incentives is key so you can frame your proposals as a win-win (or at least make sure you don’t make their lives harder).

This goes beyond the metrics they are goaled on. For example, my team at Uber was officially responsible for both 1) keeping supply and demand balanced, and 2) staying within efficiency guardrails.

However, in practice, nobody cared if we spent more than budgeted. We did, however, get grilled whenever the marketplace was undersupplied — even if just for a few hours late at night.

As a result, we cared way more about reliability than efficiency. We didn’t say that out loud or document it as a principle for the team, but everyone who’d been around for a while knew it to be true.

Context about process

How teams operate and work together

For almost any impactful project, you have to collaborate with other teams.

In addition to understanding their incentives and personal styles, you also need to understand how to work with them from a process perspective.

This goes way beyond the official org charts (which an AI may well have access to).

For example:

How do you get the data team’s support for the important experiment you want to run?

Do all requests have to be surfaced during quarterly planning, or are they flexible enough to adjust mid-quarter?

If they don’t have the bandwidth but you need the support, how do you escalate the issue?

Or:

How do the Marketing & Sales teams align their plans to make sure the overall Go-To-Market plan makes sense?

If the first version of the plans does not ladder up to the top-down goals from Finance, how do you iterate your way to a version that works?

I’d argue that, given that execution is becoming commoditized, knowing how to navigate these processes will be one of the key differentiators of high performers going forward.

How decisions are made

When you’re trying to get a project off the ground, or move to the next phase, you often need to get approval from leadership.

Doing this well can speed up progress by weeks — if not months — and will massively reduce the risk that your project will just get “stuck” at some point and never see the light of day.

Since it’s such an important topic, I wrote a separate deep dive on it.

Interpersonal relationships and reputation

Finally, even if you know how to convince another team in theory and you are doing everything “right”, you’ll often hit a wall.

You submit a request and it gets deprioritized. You escalate, but it goes nowhere. The only reliable way to avoid this is to invest in strong relationships with your key partners that allow you to “cash in” on the credit and reputation you built with them when you need to.

The best way to build up goodwill with another team is to be helpful to them first. If you show that you’re willing to be pragmatic and help them out, circumventing official processes and red tape, then they’ll be much more likely to do the same for you.

Interestingly, since both gaining context and building strong relationships take time, it might incentivize people to stay in their roles longer than they do today (especially in more mature companies where things are more stable, both in terms of “how things work” as well as the people you work with).

What this looks like in practice

Besides the challenges discussed at the beginning of this post, there is also a much simpler reason why AI (currently) struggles with these things at work: They are hard.

But when someone does have strong judgment and is skilled at influencing stakeholders, what does that look like?

You get:

The Data Scientist that:

Can tell whether a stakeholder request will result in business impact instead of just a pretty dashboard that nobody looks at (and can successfully push back on things that would be a waste of time)

Knows what needs to happen for a predictive ML model to be trusted by business stakeholders

The Strategic Finance analyst that:

Knows that adding more features and complexity to the model will just be false precision and create more of a black box, which will erode trust in the forecast

Can pinpoint and challenge the weak points in the GTM plans based on experience and get teams to take on more realistic goals

The BizOps manager that:

Designs a planning and operating cadence that creates a helpful amount of structure and discipline without restricting operating flexibility too much

Gets Marketing and Sales to align on a joint attribution model despite different incentives and political tension between teams

The Legal team member that:

Has a good sense for how much exposure and regulatory risk is acceptable to take on in exchange for faster growth

While nobody can predict the future, none of these profiles are about to be replaced by AI tomorrow. So if nothing else, leaning into these skills extends the “runway” of your current career by a few years.

Closing thoughts: Being adaptable is key

In the areas I discussed above, you can still add value for now.

However, AI is developing rapidly, and what is a human competitive advantage today might no longer be as valuable a few months or years from now (at least from an employer’s perspective).

That means it’s unrealistic to expect that you’ll be able to keep doing your job indefinitely the way you’ve done it in the past.

Even if it doesn’t get replaced completely, it will keep changing, and if you want to be successful in that new world, you need to lean into the change and adapt.

💼 Featured jobs

Ready for your next adventure? Here are some of my favorite open roles, brought to you by BizOps.careers (sorted from early to late stage):

Vannevar Labs: Business Operations | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: Remote | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $150k - $170k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 5+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series B | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: General Catalyst, DFJ Growth

Talkiatry: Sr. Associate, Strategic Finance | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: Remote | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $140k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 4+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series C | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: a16z, General Catalyst

Mercury: Chief of Staff to the CEO | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: SF / NY / Portland / US remote / CAN remote | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $175k - $243k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 5+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series D+ | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: a16z, Sequoia, Spark Capital

dbt Labs: Marketing Strategy & Planning Manager | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: Remote | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $144k - $194k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 4+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series D+ | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: a16z, Sequoia, Google Ventures

OpenAI: Sales Strategy & Operation | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: SF / NY | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $265k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 7+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series D+ | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: Thrive Capital, Coatue, Khosla Ventures, Microsoft

Faire: Revenue Operations Associate | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: SF | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $88k - $121k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 2+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series D+ | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: Sequoia, Lightspeed, Founders Fund

Relativity Space: Business Operations Associate | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: Long Beach, CA | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $93k - $119k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 1+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series D+ | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: ICONIQ, Coatue, General Catalyst

Ethos Life: Strategy & Business Operations, Senior Associate / Associate | 🏠︎ 𝗟𝗼𝗰𝗮𝘁𝗶𝗼𝗻: Remote | 💰 𝗦𝗮𝗹𝗮𝗿𝘆: $85k - $138k | 💼 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲: 2+ YOE | 🚀 𝗦𝘁𝗮𝗴𝗲: Series D+ | 🏛️ 𝗜𝗻𝘃𝗲𝘀𝘁𝗼𝗿𝘀: Sequoia, Google Ventures, Accel

Footnotes

[1] There’s also a third camp that says “AI won’t take your job, someone using AI will!”

While this is supposed to be a more clever or nuanced prediction, it’s actually equally extreme and simplistic. The implicit assumption here is that there aren’t any sets of tasks (i.e. jobs) that AI will be able to successfully handle without any human intervention or supervision (which is already demonstrably false today).

And it also implies that simply being an “AI user” will help you future-proof your career. But AI tools are getting easier and easier to use, and AI features get added to all of the tools we use every day. Most people will automatically become AI users simply by using software to do their job.

[2] Whether that will be a particularly fulfilling type of role is another discussion.

[3] Some individuals do write (or make videos) about painful lessons from failed projects, but due to NDAs, they also have to avoid including too many specifics.

[4] If you don’t mind training your AI replacement in the process.

[5] AI can help here (e.g. by brainstorming approaches), but it can’t do it well yet. Convincing people requires nuanced context and strong relationships, and works much better with a physical presence (have you ever tried convincing someone in a Slack debate?)

![Succession GIF](https://substackcdn.com/image/fetch/$s_!yQDr!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fb0e1f2d9-5cab-4e4d-bdaa-cb919006aa45_500x324.gif)
